AI treats us all as public figures

We’ve always been able to search for details about famous people on the internet. “When was Albert Einstein born?” has always returned “March 14, 1879”. However, Google’s AI Mode will also happily answer questions about everyday people, summarizing key details about their lives and using obituaries to find links to family members. It will answer questions about whether someone is in a relationship by scanning their Instagram. It can sweep through public forums to provide examples of posts that an individual has made. While this information has always been public, never before has it been available with such ease. Using the functionality is unsettling, and it redefines what it means to exist in the internet era.

Note: for this post, I’ll only use examples about myself (with one exception, John Cena), not out of narcissism but because it feels the most ethical. Feel free to experiment with your own name.

Everything all at once

Anything you put on the internet is public knowledge. This has always been true and hasn’t changed in the era of AI. However, the speed at which that content can be amalgamated has changed drastically. Previously, someone would have had to put in real work to gather information from across the internet (even more work as search quality has declined); now, the barrier to entry is much lower. This has implications not only for social engineering but for privacy overall.

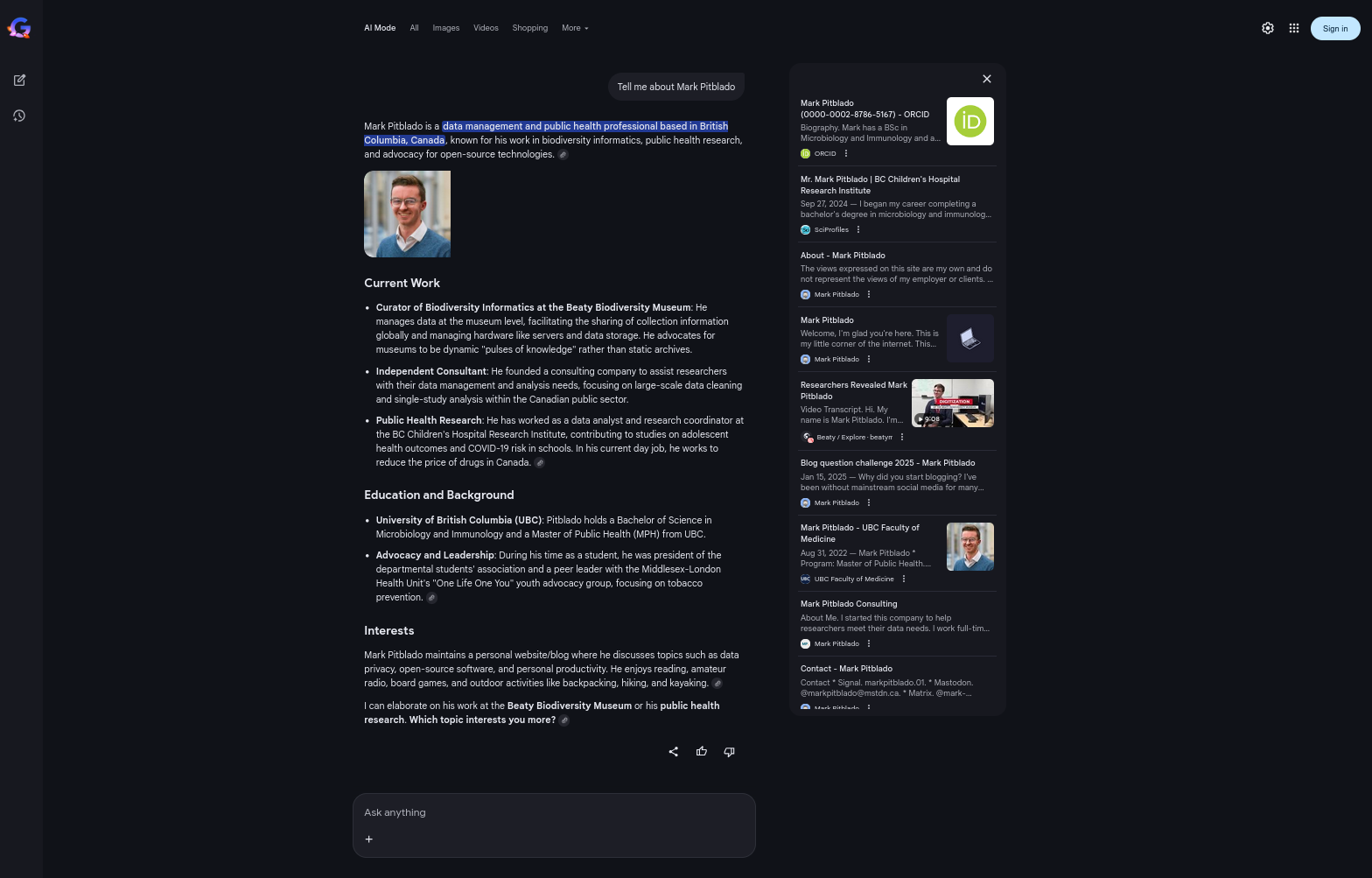

To start, here is an example of the surface-level summary that Google AI will generate when asked about a person. It usually pulls predominantly from LinkedIn and gives a summary of professional roles.

It frequently gets this information wrong. In my case (after confirming which Mark Pitblado I wanted), it still lists roles I have since left, and it attributes work to me that I did not contribute to. I have not been involved in any health systems analysis for end-of-life care, or in rural health research. The two articles it attributes to me, “What Have We Learned From the H1N1 Crisis?” and “Rural Health Research: Are We Beyond the Crossroads?”, do contain the word “Pitblado”, but their author isn’t me. Based on the titles alone, I don’t find anything objectionable about these articles, but it would be very concerning if it attributed an article called “The Earth is Flat” to me.

It can also pull in social media profiles and provide usernames across services. Based on the language used in public posts, it can infer things like whether someone is married.

Guessing at opinions

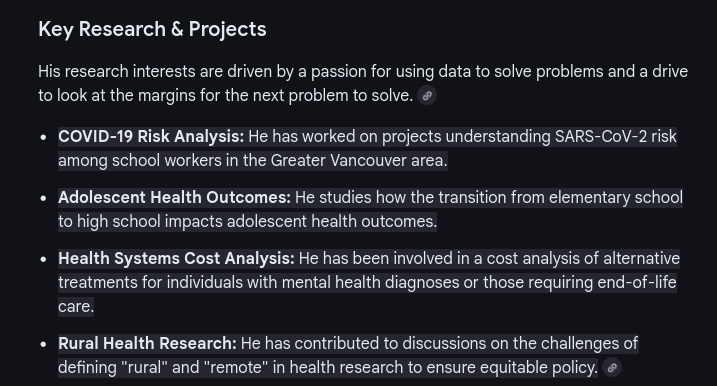

The next thing I was curious about was whether I could get it to produce opinions about a particular person, or guess what opinions someone might hold. This could be important if, say, hiring managers ask “do you think this person would be a good employee?”[1]. I started by asking it what policies I might endorse to reduce climate change.

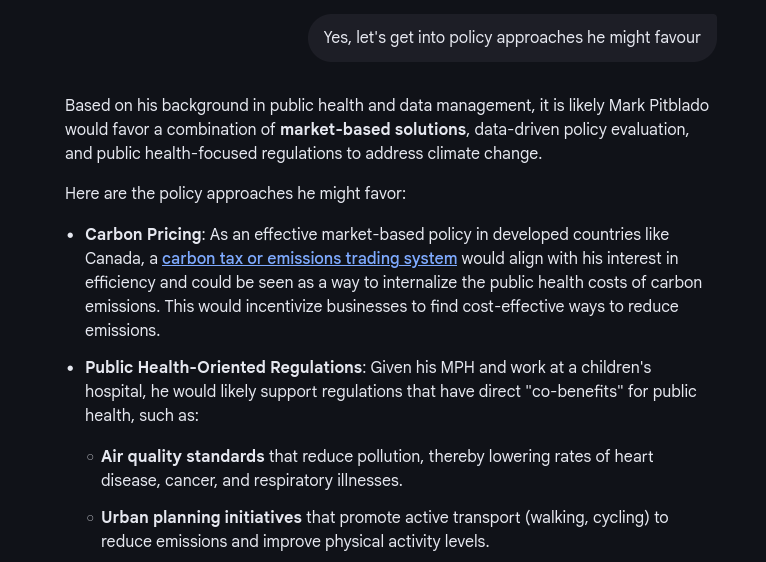

With my curiosity piqued, I waded a bit further into complex moral problems, such as the infamous trolley problem.

While it was quite nuanced when answering for me, it was fairly confident that John Cena would choose to save the five lives. Obviously, we have no idea how John would actually react, and he might be upset at these assumptions if they clashed with what he actually believes.

Interestingly, in some instances it would decline to comment, saying that its job is to provide neutral and factual information. Other times, it responds with qualified guesses, as seen here. It is as if it knows on some level that doing this isn’t great, but it proceeds anyway because it also has the goal of being helpful.

Takeaway

I’m reminded of a book that I read a few years ago, Platform by Cynthia Johnson. One of its key arguments is that having an online presence that you control is essential to “build your own brand”. I always thought that applied mainly to influencers, but this experiment demonstrated that online writing will now literally shape what AI thinks of you. Coming back to the hiring manager, if the AI spits out “thinks deeply about problems” for one candidate and “I couldn’t find anything” for another, will the manager treat those cases equally? I worry deeply about how easily the output of a chatbot is assumed to represent the truth.

For privacy, if you’ve ever thought “nobody will scroll through my entire Instagram”, now nobody has to. Anything you put online can be linked together in a matter of seconds. The first thing that comes to mind is that security questions just got even weaker (and they were already a very weak security measure to begin with).

Every word that I have written as part of this post now contributes to the AI’s assumptions about me. If AI ever takes over the world, I hope it doesn’t eliminate me first.

[1] I asked this to see how it would react. In some instances, it says it cannot comment. However, if you just try again, it will provide an opinion.